Is your business focused on delivering new features faster? To do so, are you implementing a continuous integration and continuous delivery (CI/CD) pipeline? Would you like to accelerate the CI/CD process by moving code from the development and user acceptance testing stages (DEV/UAT) to the production stage (PROD) faster? If so, this blog post is for you.

In this blog post, I’ll cover how to speed up the CI/CD process, illustrated by a use case where nClouds helped a client deliver new features faster and enhance security and compliance by implementing CI/CD automated release pipelines (using AWS CodePipeline) and a fully managed continuous integration service (using AWS CodeBuild).

Why a CI/CD pipeline is essential to your business

A modern CI/CD pipeline is table stakes for efficient software product delivery. It defines the necessary automated and manual stages (e.g., source, build, test, and deploy) of your release process workflow and describes how a new code change progresses through that process.

Let’s look at how nClouds helped one of our clients efficiently move code changes between the various stages of their release process workflow.

Customer Use Case

Our client wanted to support rapid business growth with faster delivery of new features and enhanced security and compliance. They asked nClouds to help them build a HIPAA-compliant infrastructure and implement a CI/CD pipeline to handle their increased business demand. I applied nClouds’ expertise in automating CI/CD and implementing application, cloud infrastructure, and security/compliance best practices.

- Before setting up a CI/CD pipeline, I worked with the client to optimize their application development, testing, and release process by implementing containerization and automation across the software delivery lifecycle.

- I containerized their application using Docker so that their developers could package the code into containers that include all the required dependencies and configurations. This standardized the build artifact across environments, so the same image can be referenced in the different CI/CD stages.

- For the new infrastructure in AWS, I created an Amazon Virtual Private Cloud (Amazon VPC) with six subnets spanning two Availability Zones for high availability (typically, we recommend expanding to three Availability Zones):

- Two public subnets for the load balancers of the internet-facing services.

- Two private subnets for the Kubernetes workers.

- Two private subnets for database and cache.

- Here’s what I implemented for enhanced security/compliance:

- Encrypted all resources that store data, to support HIPAA compliance.

- Included the following HIPAA-eligible services: AWS CodeBuild, AWS CodePipeline, Amazon Elastic Kubernetes Service (Amazon EKS), Amazon Elastic Container Registry (Amazon ECR), and AWS CloudFormation.

- Implemented an SSL/TLS certificate for encryption in transit.

- For application security, implemented AWS Systems Manager Parameter Store as a centralized place to store and manage parameters, granted granular permissions using AWS Identity and Access Management (AWS IAM), and encrypted all sensitive parameters using AWS Key Management Service (AWS KMS). This approach also removes hardcoded values from the code with minimal impact on performance.

- Moving code efficiently from DEV/UAT to PROD: With the new infrastructure in place and services up and running, it was time to optimize the delivery pipeline by implementing automated release pipelines (using AWS CodePipeline) and a fully managed continuous integration service (using AWS CodeBuild).

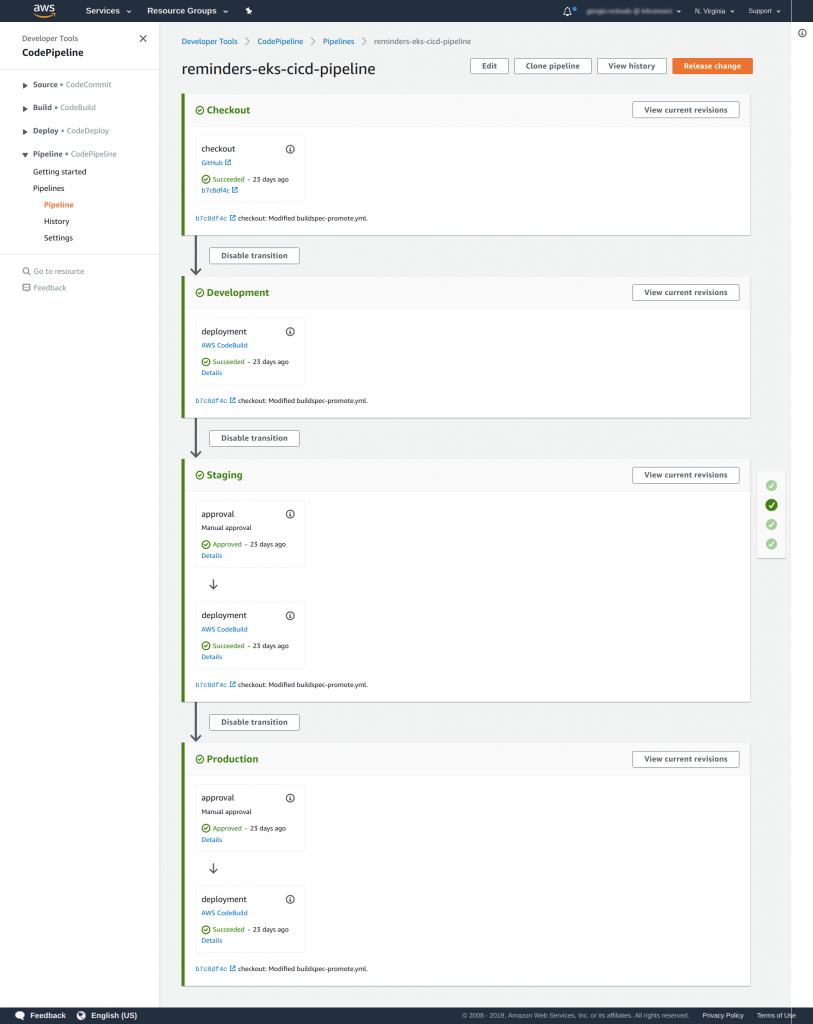

- I designed the CI/CD pipeline to build the code once, before deploying to DEV, and then promote the same Docker image across environments as it is tested and validated. The result: a faster release process, because we don’t need to rebuild the code between stages or create duplicate versions of the Docker image. The client already followed a Gitflow branching workflow that supported promotion, so the pipeline required no changes to the client’s process.

- In this case, I didn’t include automated unit tests because the client didn’t have any yet. However, I included manual approvals before the releases to staging and production so the client could run manual tests and, once the changes were accepted, release new features with the click of a button.

- This CI/CD pipeline runs sequentially: it is an ordered set of steps that deploys the code from one stage to the next. The process is triggered when the client merges code into the development branch. The code is then built automatically and deployed to DEV. The client is notified that the build process has started so they can perform their manual tests and approve the release.
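The sequential flow with manual gates described in the bullets above can be modeled in a few lines of Python. This is only a sketch for reasoning about the ordering, not the client’s actual pipeline: the stage names and the `approvals` mapping are assumptions made for illustration.

```python
from typing import Dict, List

# Ordered stages. The names are illustrative, not taken from the client's pipeline.
STAGES: List[str] = [
    "build", "dev",
    "approve-staging", "staging",
    "approve-production", "production",
]


def run_pipeline(approvals: Dict[str, bool]) -> List[str]:
    """Return the stages that complete, stopping at the first unapproved gate."""
    completed: List[str] = []
    for stage in STAGES:
        if stage.startswith("approve-") and not approvals.get(stage, False):
            break  # a manual gate blocks every stage after it
        completed.append(stage)
    return completed


if __name__ == "__main__":
    # Only the staging gate has been approved so far.
    print(run_pipeline({"approve-staging": True}))
```

Because the image is built once in the first stage, every later stage in this model only moves an existing artifact forward; nothing is rebuilt after an approval.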

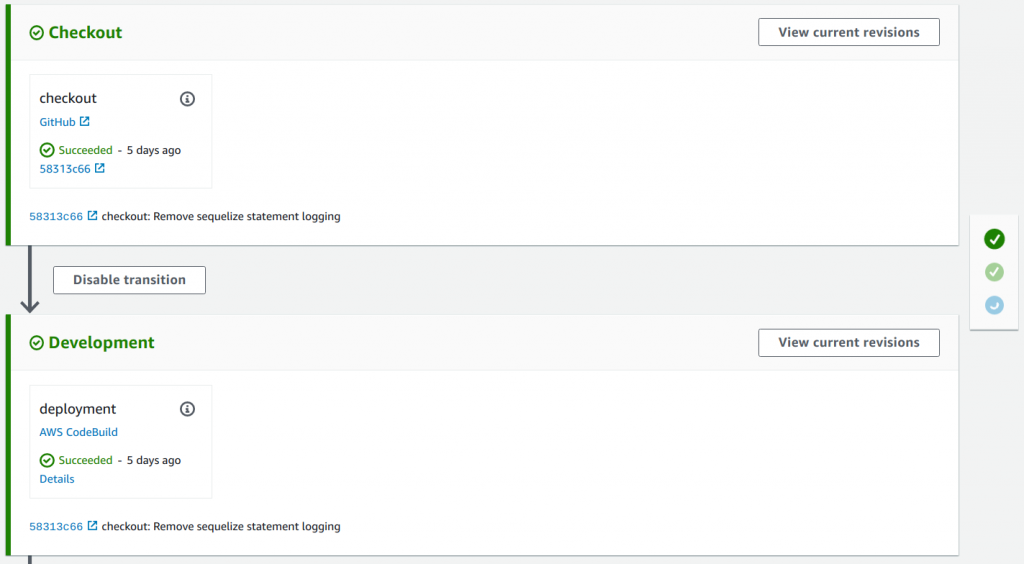

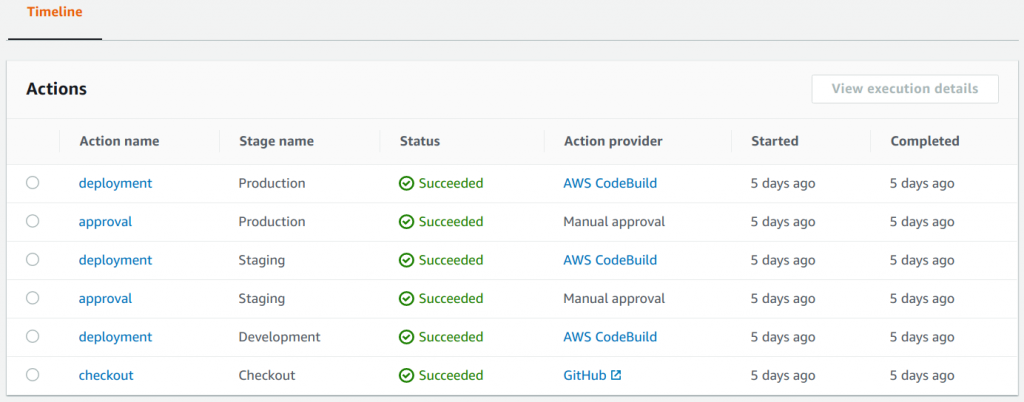

Here is an illustration of the Checkout and Development phases of the CI/CD pipeline in AWS CodePipeline:

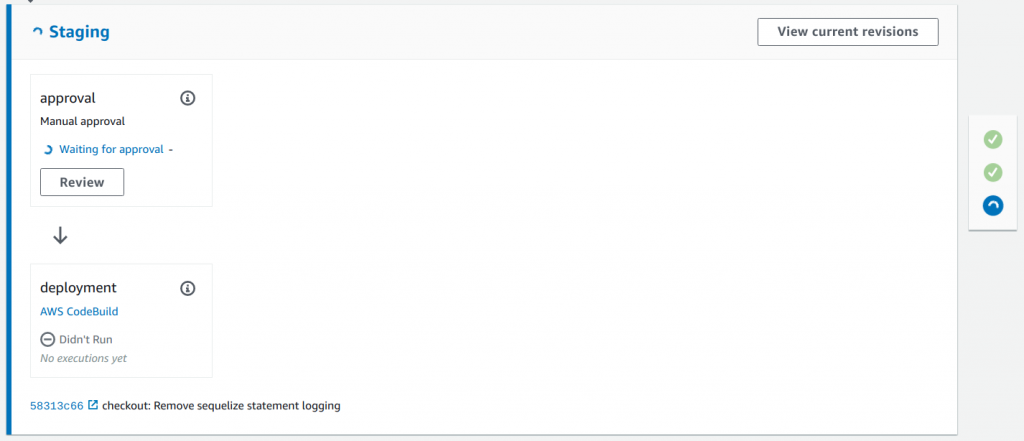

Next is the manual approval step before deploying to Staging in AWS CodePipeline:

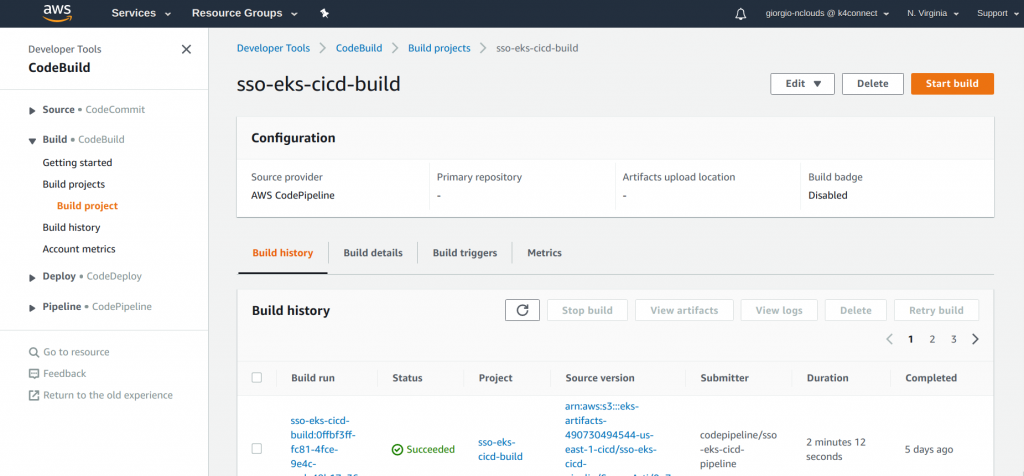

Below is the build history for the AWS CodeBuild project:

How AWS CodePipeline and AWS CodeBuild help accelerate the CI/CD process

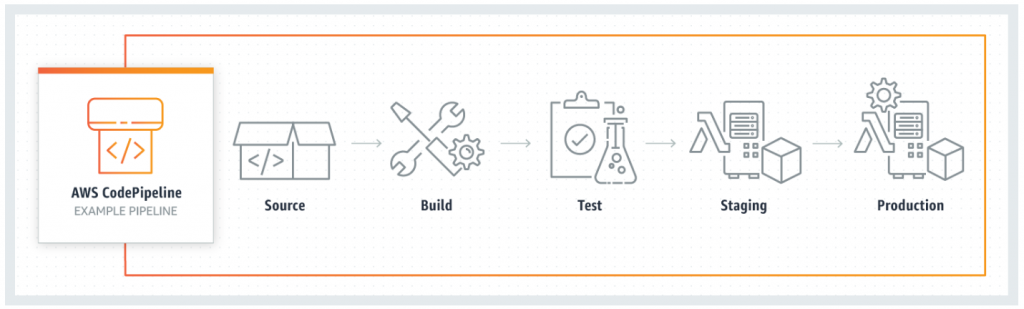

AWS CodePipeline provides a graphical user interface to create, configure, and manage the CI/CD pipeline and its various stages and actions, and to visualize and model the release process workflow. Below is an example of a pipeline using AWS CodePipeline:

Source: Amazon Web Services, Inc. AWS CodePipeline | Continuous Integration & Continuous Delivery. https://aws.amazon.com/codepipeline/

AWS CodePipeline enabled our client to speed up the CI/CD process by modeling build, test, and deployment actions to run in parallel, as shown below:

AWS CodeBuild packages the code changes and any dependencies and builds a Docker image. The Docker image is pushed to Amazon ECR after a successful build and/or test stage.
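As a rough sketch of that build-and-push step, the Python below assembles the Amazon ECR image URI and the shell commands a build job could run. The account ID, Region, repository name, and the choice to tag images with a short commit SHA are all assumptions for illustration; the post does not say how the client tagged images.

```python
from typing import List


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Return the fully qualified Amazon ECR URI for an image tag."""
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{registry}/{repository}:{tag}"


def build_and_push_commands(account_id: str, region: str,
                            repository: str, commit_sha: str) -> List[str]:
    """List, in order, the shell commands a build job could run."""
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    # Assumption: tag with the short commit SHA so each image is traceable.
    image = ecr_image_uri(account_id, region, repository, commit_sha[:7])
    return [
        f"aws ecr get-login-password --region {region} "
        f"| docker login --username AWS --password-stdin {registry}",
        f"docker build -t {image} .",
        f"docker push {image}",
    ]


if __name__ == "__main__":
    # Placeholder account ID, Region, repository, and commit SHA.
    for command in build_and_push_commands(
            "111122223333", "us-east-1", "example-app", "0123456789abcdef"):
        print(command)
```

In a real buildspec.yml these commands would typically sit in the build phases, with the commit SHA taken from an environment variable that AWS CodeBuild provides.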

Here’s what’s great about AWS CodePipeline and AWS CodeBuild

- Automation and ease of setup. These AWS managed services automated the client’s complete integration and deployment process. They are easier to set up and operate than non-AWS unmanaged tools.

- Flexibility. Because they integrate with other AWS services, these services provide greater flexibility than fully managed non-AWS CI/CD offerings.

- Security. Running AWS CodeBuild jobs under AWS IAM roles enhances security, because the jobs use temporary role credentials instead of long-lived access keys.
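To illustrate the security point, here is a minimal sketch of the two policy documents an AWS CodeBuild role might carry: a trust policy that lets the service assume the role, and a permissions policy scoped to one Amazon ECR repository and one artifact bucket. The ARNs, bucket name, and exact action list are assumptions; a real role should be trimmed to what your jobs actually do.

```python
import json
from typing import Any, Dict


def codebuild_trust_policy() -> Dict[str, Any]:
    """Trust policy that lets the AWS CodeBuild service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "codebuild.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def codebuild_permissions(repository_arn: str, artifact_bucket: str) -> Dict[str, Any]:
    """Permissions for pushing one image repository and using one artifact bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            # The registry login token cannot be scoped to a single repository.
            {"Sid": "EcrLogin", "Effect": "Allow",
             "Action": "ecr:GetAuthorizationToken", "Resource": "*"},
            {"Sid": "EcrPush", "Effect": "Allow",
             "Action": ["ecr:BatchCheckLayerAvailability", "ecr:InitiateLayerUpload",
                        "ecr:UploadLayerPart", "ecr:CompleteLayerUpload", "ecr:PutImage"],
             "Resource": repository_arn},
            {"Sid": "Artifacts", "Effect": "Allow",
             "Action": ["s3:GetObject", "s3:PutObject"],
             "Resource": f"arn:aws:s3:::{artifact_bucket}/*"},
            # Assumption: narrow this to the project's log group in practice.
            {"Sid": "BuildLogs", "Effect": "Allow",
             "Action": ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
             "Resource": "*"},
        ],
    }


if __name__ == "__main__":
    policy = codebuild_permissions(
        "arn:aws:ecr:us-east-1:111122223333:repository/example-app",
        "example-artifact-bucket")
    print(json.dumps(policy, indent=2))
```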

Steps to implement AWS CodePipeline and AWS CodeBuild

- Configure GitHub authentication. In GitHub, create a personal access token for a dedicated CI/CD user, then reference that token from the AWS CloudFormation template that defines the pipeline.

- Create an Amazon Simple Storage Service (Amazon S3) bucket for storing build artifacts.

- Create AWS IAM roles for AWS CodePipeline and AWS CodeBuild. Give each role only the granular AWS IAM permissions it needs to run the pipeline.

- Grant Kubernetes permissions for AWS CodeBuild’s AWS IAM role. This allows the AWS CodeBuild job to apply changes to the cluster.

- Create buildspec.yml files. Add the build steps to the buildspec.yml file, which the AWS CodeBuild jobs use to build the code. For my use case, I created two buildspec.yml files: one for building the Docker image and pushing it to Amazon ECR, and another to perform the promotion between environments.

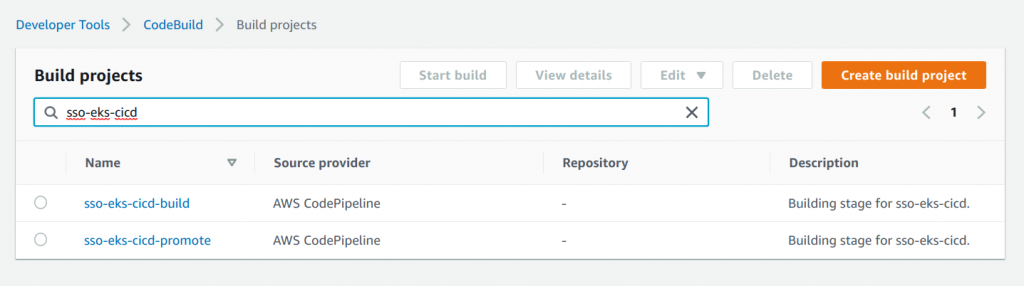

- Create AWS CodeBuild projects. For my use case, I created two projects: one for the initial build that generates the Docker image and pushes it to Amazon ECR, and another that promotes that image between environments.

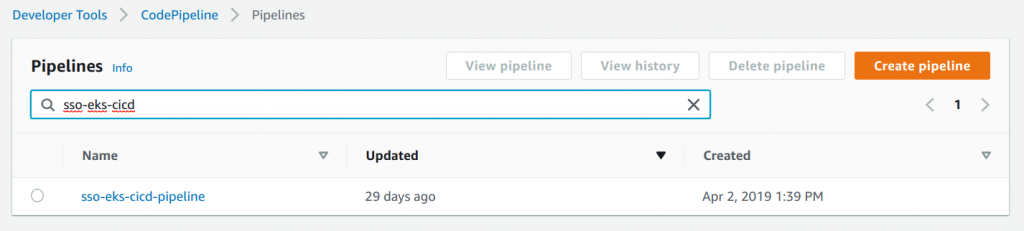

- Create a CI/CD pipeline using AWS CodePipeline. Integrate all the configurations from the previous steps. Add the stages sequentially, including manual approval steps for Staging and Production and the build and promotion of the Docker image where necessary.

- Test the process. Test the entire process by releasing changes to validate that the pipeline behaves as expected.

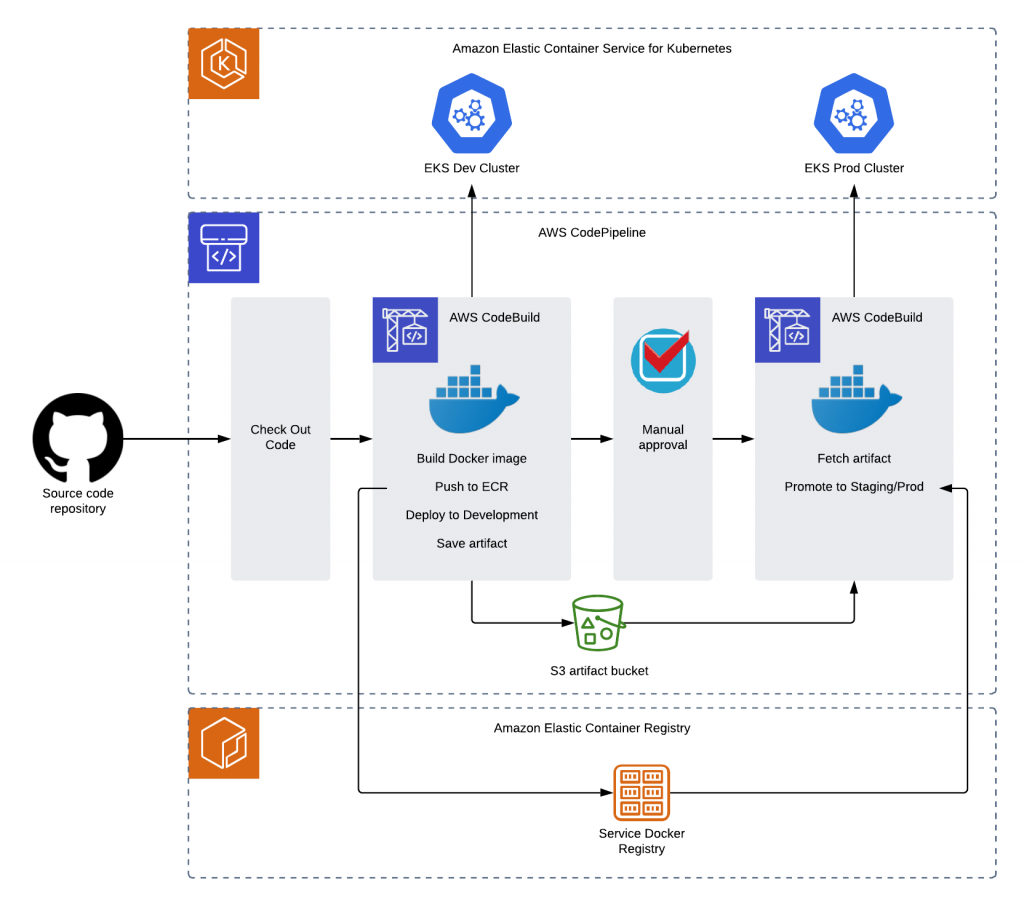
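To make the pipeline step in the list above more concrete, the sketch below assembles a pipeline definition as a plain Python dictionary in the shape the AWS CodePipeline API expects. Everything named here is an assumption for illustration: the organization, repository, project, and pipeline names, the hypothetical `TARGET_ENV` variable, and the choice to model each manual approval as its own stage.

```python
import json
from typing import Any, Dict


def codebuild_action(name: str, project: str, target_env: str) -> Dict[str, Any]:
    """An action that runs an AWS CodeBuild project on the source artifact."""
    env = [{"name": "TARGET_ENV", "value": target_env, "type": "PLAINTEXT"}]
    return {
        "name": name,
        "actionTypeId": {"category": "Build", "owner": "AWS",
                         "provider": "CodeBuild", "version": "1"},
        # TARGET_ENV is a hypothetical variable read by the buildspec.
        "configuration": {"ProjectName": project,
                          "EnvironmentVariables": json.dumps(env)},
        "inputArtifacts": [{"name": "SourceOutput"}],
    }


def approval_stage(name: str) -> Dict[str, Any]:
    """A stage that holds a single manual approval action."""
    return {"name": name, "actions": [{
        "name": "ManualApproval",
        "actionTypeId": {"category": "Approval", "owner": "AWS",
                         "provider": "Manual", "version": "1"},
    }]}


def pipeline_definition(role_arn: str, bucket: str, kms_key_arn: str) -> Dict[str, Any]:
    """Build once in Development, then promote behind manual approvals."""
    source = {
        "name": "GitHubSource",
        "actionTypeId": {"category": "Source", "owner": "ThirdParty",
                         "provider": "GitHub", "version": "1"},
        "configuration": {"Owner": "example-org", "Repo": "example-app",
                          "Branch": "development",
                          # Placeholder: inject the real token from a secret store.
                          "OAuthToken": "REPLACE_ME"},
        "outputArtifacts": [{"name": "SourceOutput"}],
    }
    stages = [
        {"name": "Checkout", "actions": [source]},
        {"name": "Development",
         "actions": [codebuild_action("BuildAndDeploy", "example-build", "dev")]},
        approval_stage("ApproveStaging"),
        {"name": "Staging",
         "actions": [codebuild_action("Promote", "example-promote", "staging")]},
        approval_stage("ApproveProduction"),
        {"name": "Production",
         "actions": [codebuild_action("Promote", "example-promote", "production")]},
    ]
    return {"name": "example-release", "roleArn": role_arn,
            "artifactStore": {"type": "S3", "location": bucket,
                              "encryptionKey": {"id": kms_key_arn, "type": "KMS"}},
            "stages": stages}
```

A dictionary like this could be passed as the `pipeline` argument to boto3’s `create_pipeline` call, or translated into the equivalent AWS CloudFormation resource if you manage the pipeline as code.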

Below is an illustration of the entire accelerated CI/CD process in AWS CodePipeline:

How everything works together

As illustrated in the diagram below:

- AWS CodePipeline checks out source code for the pipeline directly from GitHub.

- AWS CodeBuild builds the source code into a releasable artifact (a Docker image), tests it, saves it to Amazon ECR, and deploys it to the Amazon EKS development environment.

- Amazon EKS updates the deployment Pods (groups of one or more Docker containers with shared storage/network) using a rolling update strategy, automatically pulling the images from Amazon ECR.

- Amazon ECR stores, manages, and deploys the Docker container image.

- Manual approval helps ensure that only approved changes are deployed to production.

- After manual approval is performed, AWS CodeBuild fetches the artifact from Amazon ECR and promotes it to Amazon EKS staging/production.
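The promotion step at the end of this flow can be sketched as a list of commands that point an existing Kubernetes deployment at the image that was already built, without rebuilding anything. The cluster, namespace, deployment, and container names are hypothetical, and this sketch assumes one namespace per environment, which the post does not specify.

```python
from typing import List


def promotion_commands(cluster: str, region: str, namespace: str,
                       deployment: str, container: str, image_uri: str) -> List[str]:
    """List, in order, the commands that roll out an already-built image."""
    return [
        # Configure kubectl to talk to the target Amazon EKS cluster.
        f"aws eks update-kubeconfig --name {cluster} --region {region}",
        # Point the deployment at the existing image; this triggers a rolling update.
        f"kubectl set image deployment/{deployment} {container}={image_uri} "
        f"--namespace {namespace}",
        # Wait until the rollout finishes (or fails) so the job reports the outcome.
        f"kubectl rollout status deployment/{deployment} --namespace {namespace}",
    ]


if __name__ == "__main__":
    image = "111122223333.dkr.ecr.us-east-1.amazonaws.com/example-app:0123456"
    for command in promotion_commands(
            "example-cluster", "us-east-1", "staging", "example-app", "app", image):
        print(command)
```

Since the same image URI is passed to every environment, what reaches production is exactly the artifact that was validated in DEV and staging.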

Here are a few best practices

- Protect data at rest by configuring server-side encryption with AWS Key Management Service keys (SSE-KMS) for the AWS CodePipeline artifacts stored in Amazon S3.

- Configure GitHub authentication for the source repository.

- Use promotion between environments to reuse the same Docker image.
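For the encryption practice in the list above, a minimal sketch of the request that sets SSE-KMS as the default encryption on the artifact bucket could look like this. The bucket name and key ARN are placeholders; the function only builds the arguments and does not call AWS.

```python
from typing import Any, Dict


def sse_kms_encryption_request(bucket: str, kms_key_arn: str) -> Dict[str, Any]:
    """Build the arguments for setting default SSE-KMS encryption on a bucket."""
    return {
        "Bucket": bucket,
        "ServerSideEncryptionConfiguration": {
            "Rules": [{
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
            }],
        },
    }


if __name__ == "__main__":
    # Placeholder bucket name and key ARN.
    print(sse_kms_encryption_request(
        "example-artifact-bucket",
        "arn:aws:kms:us-east-1:111122223333:key/example"))
```

With boto3 installed and credentials configured, the result could be applied with `boto3.client("s3").put_bucket_encryption(**request)`.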

And beware of these “gotchas”

- Grant access to AWS CodeBuild for Kubernetes. Kubernetes is an external service with its own permissions, even if used with Amazon EKS. For this reason, you must add the AWS CodeBuild role that will perform the service updates to the aws-auth ConfigMap within Kubernetes.

- AWS CodePipeline and AWS CodeBuild rely on other AWS services that you must set up yourself, so be particularly careful with how you secure them.

- Use AWS CloudFormation for infrastructure as code, use an Amazon S3 bucket to store the artifacts, and implement AWS IAM roles.

- Provide enough access to the AWS IAM roles for AWS CodeBuild and AWS CodePipeline and apply an adequate Amazon S3 bucket policy to the artifact bucket.

- In my use case, I had to grant adequate permissions in GitHub (the client’s choice for source code repository), add server-side encryption to the Amazon S3 bucket used for artifacts, grant granular permissions through an AWS IAM role to AWS CodeBuild, and configure the Amazon EKS cluster to grant access to the right AWS IAM role for the deployment.
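The aws-auth gotcha is easier to picture with an example. The sketch below renders one `mapRoles` entry as YAML text; the role ARN, username, and the `ci-deployers` group are hypothetical, and the group would still need to be bound to the right permissions with Kubernetes RBAC.

```python
from typing import List


def map_roles_entry(role_arn: str, username: str, groups: List[str]) -> str:
    """Render one mapRoles entry for the aws-auth ConfigMap as YAML text."""
    lines = [
        f"- rolearn: {role_arn}",
        f"  username: {username}",
        "  groups:",
    ]
    lines.extend(f"    - {group}" for group in groups)
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder role ARN; "ci-deployers" is a hypothetical RBAC group.
    print(map_roles_entry(
        "arn:aws:iam::111122223333:role/example-codebuild-role",
        "codebuild",
        ["ci-deployers"]))
```

The rendered entry belongs under `data.mapRoles` in the `aws-auth` ConfigMap in the `kube-system` namespace. Mapping the role to a narrowly scoped group rather than a cluster-admin group keeps the build job’s access limited to what the deployment needs.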

How do you decide which CI/CD tools are best for your use case?

Here are the factors we consider at nClouds when determining whether to use AWS CodePipeline and AWS CodeBuild vs. non-AWS CI/CD tools:

- Implementation complexity. AWS CodePipeline and AWS CodeBuild fall in the middle between simple and complex implementation. You don’t have to configure and provision all your infrastructure to support the CI/CD pipeline. That said, you do need to integrate these services with other AWS services to get full functionality, which makes them a bit harder to implement than fully managed CI/CD offerings.

- Security. Compare AWS CodePipeline and AWS CodeBuild with unmanaged or fully managed non-AWS CI/CD tools:

- AWS CodePipeline and AWS CodeBuild easily integrate with other AWS services, which is a plus for security because you can use AWS IAM roles instead of access keys from an IAM user. Credential rotation is therefore handled for you, and you can focus on granting the granular AWS IAM permissions that your jobs need.

- CI/CD tools that are not managed at all would be in your infrastructure, so you’d usually put them on Amazon Elastic Compute Cloud (Amazon EC2) instances where you can use IAM roles.

- Fully managed CI/CD tools manage all the underlying infrastructure, so you’d need to use AWS access keys (which you’d need to manage and rotate regularly).

- Flexible pricing. AWS CodePipeline pricing is determined by the number of active pipelines you have during a month, charged at $1 each. AWS CodeBuild pricing is determined by the compute resources you use and how long your builds run. While this pricing is more complex than that of fully managed solutions, you get more flexibility. You may also incur charges for other AWS resources that you use in your CI/CD pipeline.
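As a back-of-the-envelope illustration of the pricing model described above, the sketch below adds the per-pipeline fee to a usage-based build charge. The $1 fee comes from the text; the per-minute build rate is a made-up placeholder, since the real rate depends on the compute type and Region, so check current AWS pricing before relying on any numbers.

```python
from decimal import Decimal

# Per active pipeline per month, as quoted in the text above.
PIPELINE_MONTHLY_FEE = Decimal("1.00")


def estimate_monthly_cost(active_pipelines: int, build_minutes: int,
                          rate_per_build_minute: Decimal) -> Decimal:
    """Estimate the monthly AWS CodePipeline plus AWS CodeBuild charges."""
    pipeline_cost = PIPELINE_MONTHLY_FEE * active_pipelines
    build_cost = rate_per_build_minute * build_minutes
    return pipeline_cost + build_cost


if __name__ == "__main__":
    # Hypothetical rate of $0.005 per build minute; not an actual AWS price.
    print(estimate_monthly_cost(3, 400, Decimal("0.005")))
```

The estimate leaves out the other AWS resources a pipeline touches, such as Amazon S3 storage for artifacts and Amazon ECR storage for images.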

In conclusion

If, like most of our clients, your business requires fast delivery of new features, you can help your teams go faster while also enhancing security and compliance by implementing CI/CD with AWS CodePipeline and AWS CodeBuild.

Need help with implementing CI/CD? The nClouds team is here to help with that and all your AWS infrastructure requirements.